What Are Adversarial Artificial Intelligence Attacks

By BUFFERZONE Team

Target: Consumers

Tags: Machine Learning, Deep Learning, Adversarial, Artificial Intelligence

As we enter an era dominated by Artificial Intelligence (AI), new vulnerabilities are exposed and new threat landscapes evolve. One such threat is adversarial AI: malicious inputs to AI models that are designed to deceive them and produce incorrect outputs. For instance, subtle changes to an image might be unnoticeable to the human eye, yet they can cause an AI model to misclassify it [1].
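To make the idea concrete, here is a minimal sketch in Python. It is not taken from the cited sources: it uses a toy logistic-regression "image classifier" with made-up weights and a step in the style of the fast gradient sign method, a well-known way to build such perturbations. The names `predict_proba` and `fgsm_perturb`, the 28x28 image size, and every numeric value are assumptions chosen for illustration.

```python
import numpy as np

def predict_proba(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return 1.0 / (1.0 + np.exp(-(np.dot(w, x) + b)))

def fgsm_perturb(w, b, x, y_true, epsilon):
    """Shift every pixel by at most epsilon in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    grad_x = (p - y_true) * w  # gradient of the cross-entropy loss w.r.t. the input
    return np.clip(x + epsilon * np.sign(grad_x), 0.0, 1.0)

# Hypothetical model: 784 weights (a flattened 28x28 image) with alternating signs.
n_pixels = 28 * 28
w = np.where(np.arange(n_pixels) % 2 == 0, 0.05, -0.05)
b = 0.2

# Hypothetical input: a uniform grey image whose true label is class 1.
x = np.full(n_pixels, 0.5)
x_adv = fgsm_perturb(w, b, x, y_true=1.0, epsilon=0.02)

print("clean score:      ", predict_proba(w, b, x))
print("adversarial score:", predict_proba(w, b, x_adv))
print("largest pixel change:", np.max(np.abs(x_adv - x)))
```

In this toy setup no pixel moves by more than 0.02 on a 0-to-1 scale, yet the score crosses the 0.5 decision threshold, so the predicted class flips. Real attacks target far larger models, but the principle is the same: many tiny, coordinated changes add up.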

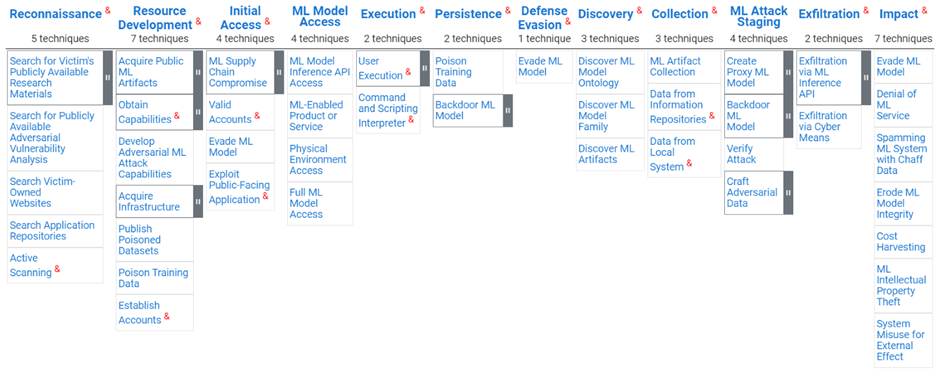

Governments and the private sector are realizing the importance of safeguarding AI tools, especially when malicious entities employ ML-enabled attack techniques [3]. A prime example is the “Adversarial ML (Machine Learning) Threat Matrix,” Microsoft’s attempt to catalog known adversary techniques against ML systems [2]. MITRE ATLAS™ [4] offers a more advanced attack mapping. ATLAS is a knowledge base that documents adversary tactics and techniques based on real-world attack observations and scenarios tailored to cybersecurity and AI. The ATLAS Matrix shows how adversaries exploit weaknesses in ML systems, providing an inventory of potential vulnerabilities in ML models that attackers could target. This initiative helps researchers navigate evolving threats to AI and ML systems and strengthen their defenses against adversarial tactics.

The ATLAS Matrix below shows how attack tactics progress from reconnaissance, resource development, and initial access through the later attack stages that may compromise an ML model, for example by impairing its ability to detect threats or by exploiting the model itself.

Figure 1. MITRE ATLAS [4] Attack Matrix

With AI model sharing through model zoos now highly popular, a new repository was recently released that assigns a reputation to AI models, presents each model's risk, and aims to limit AI supply chain risks [5]. This step is an important milestone in securing AI models, and it is only the beginning. Soon we will likely see additional security controls that protect model privacy and guard against data leakage, along with advanced mechanisms that harden AI models against bypass attempts.

How can we protect our organization against adversarial AI?

- Regularly Updating AI Models: Keeping AI/ML models updated helps them detect and adapt to the latest adversarial techniques.

- Input Validation: Ensuring the data fed into AI models is clean and verified reduces the risk of adversarial attacks.

- Robust Training Data: Training AI models on diverse and comprehensive datasets makes them more resilient to attacks.

- External Audits: Periodically review and audit AI systems for potential vulnerabilities.

- Use Tools like the Adversarial ML Threat Matrix and MITRE ATLAS: Stay updated on known adversarial techniques and develop countermeasures.
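As one illustration of the input validation item above, the following Python sketch rejects inputs that do not look like the images a model expects before they ever reach it. The function name `validate_image_input`, the 28x28 shape, and the 0-to-1 pixel range are all assumptions made for this example, not part of any particular product or library. Checks like these catch malformed or out-of-range data; they will not, on their own, catch a carefully crafted perturbation that stays within valid pixel values.

```python
import numpy as np

def validate_image_input(x, expected_shape=(28, 28)):
    """Return a list of problems found; an empty list means the basic checks passed."""
    if not isinstance(x, np.ndarray):
        return ["input is not a NumPy array"]
    if x.shape != expected_shape:
        return [f"unexpected shape {x.shape}, expected {expected_shape}"]
    if not np.issubdtype(x.dtype, np.floating):
        return [f"unexpected dtype {x.dtype}, expected a float type"]

    problems = []
    if not np.all(np.isfinite(x)):
        problems.append("contains NaN or infinite values")
    elif x.min() < 0.0 or x.max() > 1.0:
        problems.append("pixel values fall outside [0, 1]")
    return problems

# Hypothetical inputs: one well-formed image and one with out-of-range pixels.
good_image = np.full((28, 28), 0.5)
bad_image = np.full((28, 28), 2.0)

print(validate_image_input(good_image))
print(validate_image_input(bad_image))
```

Returning a list of problems rather than raising an exception is a design choice for this sketch: it lets the caller log every rejected input, which can itself be a useful signal that someone is probing the model.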

Ensuring robustness against adversarial attacks is imperative in a world that is increasingly reliant on AI.

In our upcoming blog post, we’ll delve into the various types of adversarial attacks targeting AI models.

References

[1] V. Fordham, D. Caswell, and A. Diehl, Securing government against adversarial AI, https://www2.deloitte.com/us/en/insights/industry/public-sector/adversarial-ai.html

[2] R. S. S. Kumar and A. Johnson, Cyberattacks against machine learning systems are more common than you think, https://www.microsoft.com/en-us/security/blog/2020/10/22/cyberattacks-against-machine-learning-systems-are-more-common-than-you-think/

[3] T. Olzak, Adversarial AI: What It Is and How to Defend Against It?, https://www.spiceworks.com/tech/artificial-intelligence/articles/adversarial-ai-attack-tools-techniques/

[4] MITRE ATLAS, https://atlas.mitre.org/

[5] E. Chickowski, AI Risk Database Tackles AI Supply Chain Risks, https://www.darkreading.com/emerging-tech/ai-risk-database-tackles-ai-supply-chain-risks